How Can Democratic Society Defend Against Trump’s Social Media Blitzkrieg?

This article was produced by Voting Booth, a project of the Independent Media Institute.

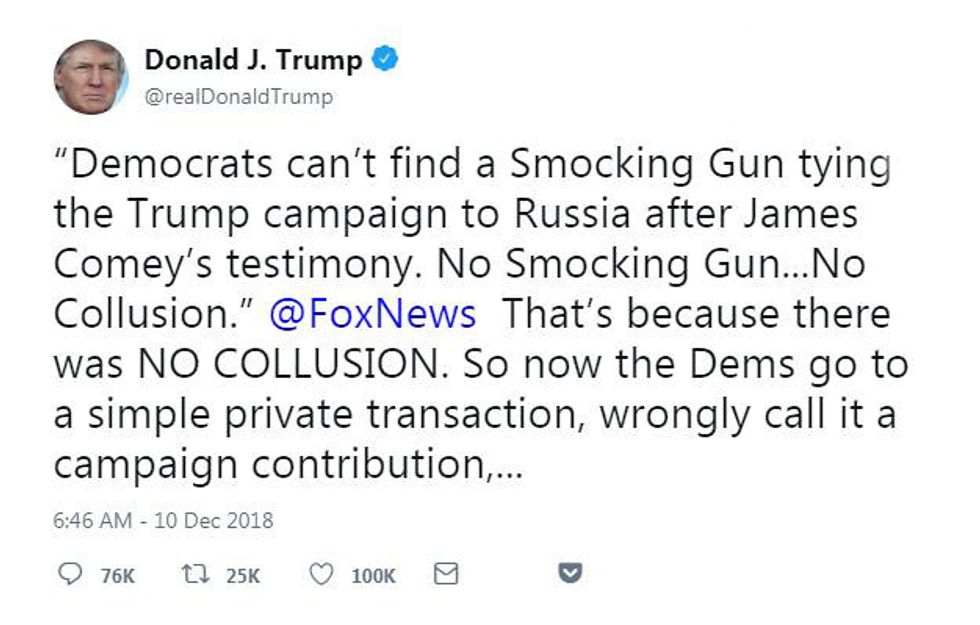

As 2020 nears, disinformation—intentionally false political propaganda—is increasing and getting nastier. Central to this disturbing trend is President Trump, whose re-election campaign and allies revel in mixing selected truths, half-truths, knowing distortions and outright lies, especially with messaging sent and seen online.

Trump’s rants about impeachment, Ukraine, the Bidens, Nancy Pelosi, the media, and any opponent abound. On Twitter, in statements to the press, and at rallies, he sets the angry tone. His White House staff, right-wing media, 2020 campaign and surrogates embellish his cues. Hovering above this cultivated chaos is a larger goal, propaganda experts say: to create an omnipresent information operation that drives news narratives.

Thanks to Trump, Americans have been subjected to a crash course in propaganda. When Russia used similar tactics in the West in recent years, its goals were to increase polarization, destabilize society, and undermine faith in democratic institutions, as noted in a 2019 report by Stanford University’s Cyber Policy Center. When Trump and his supporters propagandize, it is to assert themselves, smear critics and rivals, and manipulate “unwitting Americans,” according to NYU’s Stern Center for Business and Human Rights.

With the continuing rise of online media, there is no end in sight to the trend of escalating disinformation. Platforms like Facebook, YouTube, Instagram and Twitter are the most direct way to target and reach any voter. They share an architecture built to elevate, spread, and track provocative content, designed to push impulse sales. When used by political operatives, these tools favor inflammatory material and its most aggressive purveyors, such as Trump, along with the messaging that promotes him.

Today’s presidential campaigns, led by Trump, are spending more online than on television, exacerbating disinformation’s spread. Making matters worse is that the biggest online platforms, led by Facebook and YouTube, have inconsistent standards on blocking ads containing clear lies.

Facebook will leave up political ads containing lies if they are posted by candidates. Google says it will not post dishonest ads, but ads with false claims have stayed up. Twitter will not sell political ads after this fall, but Trump’s tweets violating its rules banning abusive content will remain. In contrast, many television and cable networks won’t run the same ads. (Fox News is an exception, as its clips continue to be edited in ways that mangle the speech of Trump critics like Pelosi. His most loyal base watches Fox.)

But there’s more going on than pro-Trump forces creating ugly content that plays to partisan bias and goes viral online. Because online media platforms spy on and deeply profile every user—so their advertisers can find audiences—Trump’s campaign has used online advertising data and tools to find traumatized people and target them with intentionally provocative fear-based messages. (Its merchandise sales have similar goals.)

“This isn’t about public relations. It isn’t about online advertising. This is about information warfare,” said Dr. Emma L. Briant. The British academic and propaganda expert has documented how U.S. and UK spy agencies, militaries and contractors developed, tested and used such behavioral modification tactics—exploiting online platforms—in national elections in the U.S., in the UK, and overseas.

Briant has detailed how Trump’s 2016 campaign used personality profiles of voters (derived from stolen Facebook data) to identify anxious and insecure individuals in swing states. They were sent Facebook ads, YouTube videos, Instagram memes (photos or cartoonish images with mocking text) and Twitter posts to encourage or discourage voting. Her new book describes this effort amid a growing global influence industry.

“They were targeting people who are the most fearful in our society,” Briant said. “They were deliberately trying to find those people and send them messages, and measuring how much they would be scared by it… We are pretending that this is advertising for the modern day. It actually is a hybrid system that has fused intelligence operations with public relations.”

What Briant described about 2016 has only deepened in 2019 as more of our lives are lived, and can be traced, online. Internet privacy has largely vanished. Digital devices record and share ever more revealing details with marketers. What worries Briant is not the sizeable number of people who can and will ignore propaganda screeds, but the individuals who are susceptible to the politics of blame and grievance and who might heed dark cues, such as white supremacists, whose leafleting has increased, or people inclined to take guns to the polls.

The Democrats have not yet joined a 2020 disinformation arms race. Some media analysts have suggested that they must do so to compete effectively with Trump, especially on Facebook, where the audience is now trending older and more pro-GOP than it was in 2016 (as younger people have moved to other socializing apps, including Facebook-owned Instagram, WhatsApp and Messenger).

One leading Democratic presidential candidate, Sen. Elizabeth Warren (D-MA), has gone beyond criticizing Facebook’s recent policy to leave up demonstrably false political ads. Warren posted her own false ad—saying that Facebook has endorsed Trump—to underline her point: that online media operates under looser standards than TV.

More widely, Democrats have turned to new tools and tactics to detect and counter false online narratives without embracing Trump’s playbook. These higher-minded tools are being used by groups like Black Lives Matter to identify, trace and respond to attacks in real time. Top party officials also believe that they can draw on the relationships between Democrats across the nation and voters in swing states to counter pro-GOP propaganda.

Whether the Democrats’ countermeasures will work in 2020 is an open question. But it is possible to trace how propaganda has grown in recent months. The formats and shapes are not a mystery; the disinformative content is a mix of online social media posts, personas and groups, memes, tweets and videos—real and forged—that feed off and often distort the news that appears on television. Like Trump’s media presence, this noise is unavoidable.

New Propaganda Pathways

Today’s disinformation, and what will likely appear next year, is not going to be an exact replica of 2016’s provocations—whether by any candidate, allied campaigns or even foreign governments.

In September, NYU’s Stern Center issued a report predicting the “forms and sources of disinformation likely to play a role during the [2020] presidential election.” Domestic, not foreign, “malign content” would dominate, it said, citing the growth of domestic consultants selling disinformation services. The Center predicted that faked videos, memes, and false voice mails (sent over encrypted services such as WhatsApp) would be the most likely pathways. This content is increasingly visual. Reports of disinformation tactics recently used abroad affirm this forecast and add other details, such as programs that “emulate human conversation” and the “outsourcing” of efforts to local groups, including activists who may be unaware of their role. (At home, right-wing think tanks are also creating “faux-local websites” posing as regional news outlets.)

The Center’s 2020 list contrasts with 2016’s avenues, which, in addition to right-wing media epicenters such as Breitbart and Fox News, were dominated by Facebook’s pages, groups and posts, by Instagram’s images and memes, and by tweets on Twitter, including those that were robotically amplified. The Center also distinguishes between disinformation, which is knowingly false, and misinformation, which is inaccurate but not intentionally so.

For the public, these distinctions may not matter. As a German Marshall Fund analysis of Russian “information manipulation” has noted, much of Russia’s disinformation “is not, strictly speaking, ‘fake news’ [overt lies]. Instead it is a mixture of half-truths and selected truths, often filtered through a deeply cynical and conspiratorial world view.” Examples of intentional but nuanced distortions have already surfaced in 2019. As one might expect, Trump’s allies started with his potential 2020 rivals, smearing them as part of an overall effort to rev up their base, start fundraising and shape first impressions.

Before former Vice President Joe Biden announced his bid, the most-visited website about his 2020 candidacy was put up by Trump’s supporters to mock him. Made-up sexual assault allegations were thrown at South Bend Mayor Pete Buttigieg, who is gay. Donald Trump Jr. asked if California Sen. Kamala Harris was really an “American black.”

By fall, a story Elizabeth Warren told (about how “her 1971 pregnancy caused the 22-year-old to be ‘shown the door’ as a public-school teacher in New Jersey… [leading to] an unwanted career change that put her on the path to law school and public life”) was smeared in a “narrowly factual and still plenty unfair” way, the Washington Post’s media columnist said, in a piece that noted “how poisoned the media world is.”

All these online attacks were just early salvos in a deepening information war where appearances can be deceiving, information may be false, and much of the public may not know the difference—or care. That last point was underscored by another trend: the appearance of faked videos.

A high-profile example of this troubling trend was the posting of videos on social media last spring that made Speaker of the House Nancy Pelosi appear to be drunk. The doctored footage came from a speech in Washington. The videos, called “cheapfakes,” raced through right-wing media. Few questions were asked about their origin; they were presented as authentic and apparently believed. One version pushed by a right-wing website garnered a purported 2 million views, 45,000 shares and 23,000 comments. Trump tweeted about the fake videos, which had appeared on Facebook, YouTube and Twitter. After protests, Facebook labeled the videos false and lowered their profile. YouTube removed them. But Twitter let them stand.

While those viewer numbers might be inflated, that caveat misses a larger point. Online propaganda is becoming increasingly visual, whether crass memes or forged videos. Apparently, enough Trump supporters reacted to the doctored videos that Fox News revived the format in mid-October. After Pelosi’s confrontation with Trump at the White House on October 17, Fox Business Network edited a clip to deliberately slur her voice, which the president predictably tweeted. (This is propaganda, not journalism.)

NYU’s Stern Center report forecast that doctored videos were likely to resurface just before 2020’s Election Day, when “damage would be irreparable.” Evidence from this fall’s elections supports that prediction. In Houston’s November 5 mayoral election, a conservative candidate known for flashy media tactics used an apparently faked video in a 30-second ad to smear the incumbent with allegations of ethical misconduct.

The Pelosi videos are hardly the only distorted narrative pushed by the GOP in 2019. By August, the Washington Post reported that Trump had made more than 12,000 false or misleading claims in office. One narrative concerned Ukraine and the Bidens—the former vice president and his son Hunter Biden.

The same right-wing non-profit that had tarred Hillary Clinton and the Clinton Foundation in 2015 was pushing a conspiracy theory about the Bidens and Ukraine. Four years ago, it prompted the New York Times to investigate a uranium deal with Russians that “enabled Clinton opponents to frame her as greedy and corrupt,” as the New Yorker’s Jane Mayer reported. The Ukraine accusations had not gained much traction in mainstream media, although right-wing coverage had captivated Trump. In July, he pressed Ukraine’s president to go after Biden if Ukraine wanted military aid.

That now-notorious phone call, in addition to provoking an impeachment inquiry, has ended any pretense of partisan restraint. Since then, the Trump campaign has put out a knowingly false video on Facebook saying “that Mr. Biden had offered Ukraine $1 billion in aid if it killed an investigation into a company tied to his son,” as the New York Times put it. That video was seen 5 million times.

But pro-Trump propaganda had already been escalating. In recent months, the ante kept rising, with the use of other tools and tactics. Some were insidious but transparent. Some were clear hyperbole. But others were harder to trace and pin down.

In July, Trump brought right-wing media producers to the White House to laud their creation and promotion of conspiratorial and false content. “The crap you think of is unbelievable,” Trump said. Afterward, some attendees began attacking reporters whose coverage of the administration is critical, personalizing Trump’s war on the press. (In October, one attendee recycled a video mash-up he had made last year depicting a fake Trump killing his critics, including reporters; it was shown at a GOP forum at a Trump-owned Florida hotel.)

Fabricated Concern

The rampaging president video drew coverage and was seen as another sign of our times. But less transparent forms of disinformation also appeared to be resurfacing in 2019, including harder-to-trace tools that amplify narratives.

In the second 2020 Democratic presidential debate, Rep. Tulsi Gabbard (D-HI) went after California Sen. Kamala Harris. Social media lit up with posts about the attack, and Google searches for Gabbard spiked. Ian Sams, Harris’ spokesman, made a comment that raised a bigger issue.

Sams tweeted that Russian bots magnified the online interest in Gabbard. Bots are software programs that act like automated users online. Their goal is to generate views and, with them, purported concern or even outrage. Sams’ tweet marked the first time a presidential campaign had commented on bots. Social media, especially Twitter, is known for bot activity that amplifies fake and conspiratorial posts. Some estimates have said that 15 percent of Twitter shares have been automated by bots, or faked.

Sams’ tweet came after speculation from a new source that has become a standard feature of 2020 election coverage: an “analytics company” that said it saw “bot-like characteristics,” as the Wall Street Journal put it. Its experts said that they saw similar spikes during the spring. What happened next was telling.

Harris’ staff and the Journal may have been correct that something was artificially magnifying online traffic to wound her campaign. But when tech-beat reporters tried to trace the bots, the evidence trail did not confirm the allegation, and the claim backfired on her campaign.

That inconclusive finding highlights a larger point about online disinformation in 2020. Attacks in cyberspace may not be entirely traceable, eluding even the best new tools. The resulting murkiness breeds confusion, which is one goal of propagandists: to plant doubts and conspiracy theories that eclipse clarity and facts.

Sometimes, those doubts can resurface unexpectedly. In mid-October, Hillary Clinton said during a podcast that pro-Trump forces were “grooming” Gabbard to run as a third-party candidate, including “a bunch of [web]sites and bots and ways of supporting her.” (In 2016, a third-party candidate hurt Clinton’s campaign. Jill Stein, the Green Party candidate, received more votes than the margin separating Trump and Clinton in the closest swing states of Michigan and Wisconsin. That was not the case in Pennsylvania.) Gabbard rejected Clinton’s assertion that she was poised to be a 2020 spoiler, saying that she was only running as a Democrat. Trump, predictably, used their spat to smear all Democrats.

But bot activity is real whether it can be traced overseas or not. In October, Facebook announced that it had taken down four foreign-based campaigns behind disinformation on Facebook and Instagram. One of the targets of the disinformation campaigns was Black Lives Matter, which told CNN that it had found “tens of thousands of robotic accounts trying to sway the conversation” about the group and racial justice issues.

Three days after Facebook’s announcement, Black Lives Matter posted instructions for activists to defend “against disinformation going into 2020.” It asks its activists to “report suspicious sites, stories, ads, social accounts, and posts,” so its consultants can trace what’s going on—and not rely on Facebook.

Dirty campaigning is nothing new. Deceptive political ads have long been used to dupe impressionable voters. But online propaganda differs from door flyers, mailers, and campaign ads on radio and TV. Online advertising does not aim at wide general audiences; instead, it targets individuals who are grouped by their values and priorities. The platforms know these personal traits because they spy on users to create profiles that advertisers tap. Thus, online platforms invite personalized narrowcasting, which can also be sent to recipients anonymously.

The major online platforms created their advertising engines to prosper. But government agencies that rely on information about populations—such as intelligence agencies, military units, and police departments—quickly grasped the power of social media data, user profiling and micro-targeting. More recently, political consultants also have touted data-driven behavioral modification tactics as must-have campaign tools.

Thus, in 2016, these features enabled Trump’s presidential campaign to produce and deliver 5.9 million customized Facebook ads targeting 2.5 million people. This was the principal technique used by his campaign to find voters in swing states, Brad Parscale, his 2016 digital strategist and 2020 campaign manager, has repeatedly said. In contrast, Clinton’s campaign had 66,000 ads targeting 8 million people.

Television advertising never offered such specificity. TV ads are created for much wider audiences and thus are far more innocuous. As Briant, who exposed the behavioral modification methods deployed on online platforms, has noted, these systems can identify traumatized people and target them with messages intended to provoke fragile psyches.

“What they have learned from their [psychologically driven online] campaigns is that if you target certain kinds of people with fear-based messaging—and they know who to go for—that will be most effective,” she said, speaking of past and present Trump campaigns, pro-Brexit forces and others.

“This is not just about Facebook and Facebook data, but about a surveillance infrastructure that has been married together with marketing techniques, with lobbying firms, with intelligence contractors,” she said. “But these firms deploy as P.R. [public relations or campaign] contractors.”

Everything Can Be Traced, Tracked

As 2020’s elections approach, many voters may not realize the extent to which they will be in the digital targeting crosshairs.

The big difference between 2016 and now is that today virtually everything that passes through one’s digital devices—computers, tablets, phones—can be tracked, traced and answered in real time or soon after it appears, by commercially available tools. This level of scrutiny and interaction is not new in cyberspace’s black-market world. But it is now in the tool kits being used in political campaigns to send, track and counter disinformation.

For example, the Dewey Square Group, a Washington political consulting firm allied with Democrats, has a new service called “Dewey Defend.” It scans the fire hose of unfiltered data from the big online platforms for posts that mention the firm’s clients, such as Black Lives Matter, or clients’ adversaries, such as Blue Lives Matter, or that contain key words. What then unfolds is yet another dimension of information warfare in what Silicon Valley has labeled the “social media listening” space.

Dewey Square says that it can trace a message’s origin back to as early as the tenth time that it appears. That operation can involve piercing efforts to hide or mask the real sender’s identity. It also traces the recipient’s engagement. Was the content deleted, read or shared? And, more critically, who received it? They can identify recipient devices so that countermeasure content can be sent back—via a spectrum of channels and not just a single social media account.

Dewey Square’s tools are at the cutting edge of a growing industry of players specializing in online information warfare. There are other iterations of this level of tracking technology in play, such as the ability of campaigns to create an electronic fence around rallies. Here, unique IDs are pulled off attendees’ smartphones to follow them home. Attendees are later sent messages on their devices, anything from appeals to donate to reminders to get out and vote. Campaigns can also trace attendees’ friends, family and contacts.

Security researchers discovered the basis for such spying—called “fingerprinting”—in 2012. Only recently has it been more openly discussed and marketed. If you use the web, your browser sends out information about your device so apps can work and content can appear correctly on the screen. This activity opens a door to online surveillance and information warfare, experts say.

What is new in 2019, however, are the tools and efforts to call out what is happening behind users’ screens—including the range of deceptive communications such as doctored videos; bot-fed traffic; fake personas, pages and groups; and hijacked hashtags.

Today’s cyber detectives will report malfeasance to the platforms, hoping that it will be removed. They will share findings with journalists to spotlight bad actors. They will give clients the device addresses of disinformation recipients so that counter-messages can be sent. This is a far bigger battlefield than in 2016.

The Democrats’ Strategy

The Democrats are doing other things that they hope will counter disinformation. The DNC has rebuilt its data infrastructure to share information between candidate campaigns and outside groups, said Ken Martin, a DNC vice-chair, president of the Association of State Democratic Party chairs, and Minnesota Democratic-Farmer-Labor Party chairman.

Martin, who helped lead this data redesign, said there are many independent voters in the swing states (perhaps a better term is ambivalent voters) whom party officials will seek to reach. These are people who voted for Trump in 2016 but later voted for Democrats, he said. They helped elect Doug Jones as U.S. senator from Alabama in 2017 and, in 2018, helped elect Democratic governors and retake the U.S. House majority. Martin hopes to outsmart online micro-targeting, the crux of Trump’s early re-election effort, by relying on what’s called “relational organizing.”

After the 2016 election, activist Democrats in tech circles began creating companies and apps for the party and candidates. Instead of relying on Facebook to identify likely voters at the zip code or district level, the idea was to tap the real, pre-existing relationships that Democrats across the country have with friends and family in places with key contests.

Democrats are hoping that people will share social media contacts and other lists, which, once analyzed, would identify voters in key locations, Martin explained. This strategy, relying more on peer pressure than online profiling, is seen as being potentially effective with fundraising, organizing and turning out voters.

Martin wants to believe that appeals from “trusted messages and validators” will work. Inside the party, however, there is a debate about using disinformation, he said. In fall 2017, a few tech executives experimented with online disinformation in Jones’ Senate race, raising questions about whether some in the party would do so in 2020.

What Can Voters Do?

The political consulting profession is not known for unilateral disarmament—one side holding back while the other prepares to attack. Any effective tactic tends to be quickly embraced by all sides. By mid-2019, the caustic contours of Trump’s messaging and disinformation were clear.

In the first six months of 2019, Trump’s campaign spent more than $11.1 million on Facebook and Google ads, the platforms reported. Trump put forth thousands of ads attacking factual media as “fake news,” warning of an “invasion” at the Mexican border and calling the then-unfinished special counsel investigation into Russian interference in 2016 a “witch hunt.” By November, his campaign had spent more on finding supporters than the top three Democratic candidates combined. As 2020 approaches, the topics in his messages might change, but their tone and overall narrative will not.

The Trump campaign’s common thread is not just to create doubts about Democrats and to demonize critics, but also to reinforce tribal lines. Trump is defending white America against the “undeserving outsiders and the Democrats who represent them,” the New York Times said in early 2019, describing his playbook and its GOP copycats.

By Labor Day, Trump was telling crowds that they had no choice but to vote for him, contending that a vote for the Democrat would mean economic collapse. His team knows what it is doing by fearmongering. In recent years, political scientists have noted a trend in which voters are both more partisan and more skeptical of their own party. “Americans increasingly voted based on their fear and distrust of the other side, not support for their own,” the Times noted in March 2019.

Fear-based politics is not new, but online psychological profiling and micro-targeting are more powerful than ever. Any swing district voter identified as traumatized is likely to be caught in the crossfire. Whether the voters seen as likely to tip the 2020 election will fall victim to online provocations is an open question. When asked what advice he had for such voters, Martin said, “Do your research.”

Briant is less optimistic. The platforms are “lawless” and won’t turn off their surveillance and targeted advertising systems, she said, even if top platforms like Facebook are outing foreign influence operations.

But her biggest worry is that Democrats will try to match Trump’s shameless disinformation, and that civil society will unravel further.

“If you imagine a scenario where both sides decide to weaponize targeting certain kinds of people with fear-based messaging—and they know who to go for—this will cycle out of control,” she said. “We are all being made fearful of each other. There’s no way on earth that we can handle this, psychologically, and deal with the important issues, like climate change, when we are being turned against each other.”

But that is what disinformation is designed to do. And all signs suggest that there will be more of it than ever in 2020 as the presidential election, congressional elections, state races and impeachment proceed.

Steven Rosenfeld is the editor and chief correspondent of Voting Booth, a project of the Independent Media Institute. He has reported for National Public Radio, Marketplace, and Christian Science Monitor Radio, as well as a wide range of progressive publications including Salon, AlterNet, the American Prospect, and many others.
